CONFIGURE JBOD IN DISK GENIUS DRIVER

All physical disks in a clustered pool must pass the validation test in Failover Clustering. To run it, open the Failover Cluster Manager (cluadmin.msc) and select the Validate Cluster option. The remaining requirements are:

- The clustered storage space must use fixed provisioning (this refers to the type of vhd(x) drive used for Storage Spaces).
- Only the simple and mirror types of Storage Spaces are supported. The parity type is not supported.
- Disks used in a failover pool must be dedicated, meaning they cannot be part of any other pool.
- Storage Spaces formatted with ReFS cannot be added to a Cluster Shared Volume (CSV).

CONFIGURE JBOD IN DISK GENIUS SERIAL

Such setups are great for building resilient file and iSCSI servers. So, what do we need for the configuration? To create Storage Spaces in Failover Cluster mode, we need at least 3 physical disks of at least 4 GB each. All disks in the storage pool must be Serial Attached SCSI (SAS), whether they are connected to the system directly or through a layer between the OS and the disks, such as a RAID controller or another disk subsystem.
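The requirements stated in this section and the previous one can be collected into a small pre-flight check. The following is only a minimal sketch: the `Disk` and `PoolPlan` types and the `check_plan` function are hypothetical names invented for illustration, the data is entered by hand rather than read from Windows, and it does not replace the Validate Cluster test.

```python
from dataclasses import dataclass
from typing import List

# Thresholds taken from the text; everything else here is a hypothetical model.
MIN_DISKS = 3
MIN_DISK_SIZE_GB = 4
SUPPORTED_RESILIENCY = {"simple", "mirror"}


@dataclass
class Disk:
    name: str
    bus_type: str              # e.g. "SAS" or "SATA"
    size_gb: float
    in_other_pool: bool = False


@dataclass
class PoolPlan:
    disks: List[Disk]
    resiliency: str            # "simple", "mirror" or "parity"
    provisioning: str          # "fixed" or "thin"
    filesystem: str            # "NTFS" or "ReFS"
    use_csv: bool = False      # will the space be added to a CSV?


def check_plan(plan: PoolPlan) -> List[str]:
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    if len(plan.disks) < MIN_DISKS:
        problems.append(f"need at least {MIN_DISKS} disks, got {len(plan.disks)}")
    for disk in plan.disks:
        if disk.bus_type.upper() != "SAS":
            problems.append(f"{disk.name}: bus type {disk.bus_type} is not SAS")
        if disk.size_gb < MIN_DISK_SIZE_GB:
            problems.append(f"{disk.name}: smaller than {MIN_DISK_SIZE_GB} GB")
        if disk.in_other_pool:
            problems.append(f"{disk.name}: already belongs to another pool")
    if plan.resiliency.lower() not in SUPPORTED_RESILIENCY:
        problems.append(f"resiliency '{plan.resiliency}' is not supported in a cluster")
    if plan.provisioning.lower() != "fixed":
        problems.append("provisioning must be fixed")
    if plan.use_csv and plan.filesystem.upper() == "REFS":
        problems.append("a ReFS-formatted space cannot be added to a CSV")
    return problems


if __name__ == "__main__":
    plan = PoolPlan(
        disks=[Disk(f"disk{i}", "SAS", 900) for i in range(4)],
        resiliency="mirror",
        provisioning="fixed",
        filesystem="NTFS",
        use_csv=True,
    )
    print(check_plan(plan) or "no problems found")
```

Returning a list of problems rather than raising on the first one makes it easier to fix a plan in a single pass.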

CONFIGURE JBOD IN DISK GENIUS WINDOWS

For use as a SAS JBOD, we can use any Supermicro enclosure with 2 expanders (E26 or E2C for SAS2 and SAS3, respectively). The minimum configuration looks like this: 2 servers, each with a 2-port SAS HBA connected to a 2-expander SAS JBOD based on a Supermicro enclosure with SAS disks. Each expander in Supermicro enclosures has at least 2 x4 SAS connectors (SFF-8087 or SFF-8643) that can be used as inputs. If there is a 3rd connector, it can be used for cascading (connecting additional racks) or for building a topology with 3 nodes in a cluster. There are also options that fit everything into one case, the so-called Cluster-in-a-box (CiB), based on Supermicro Storage Bridge Bay with Windows Storage Server 2012 R2 Standard pre-installed.
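The cabling rule behind the minimum configuration (every server reaches both expanders, and no expander needs more input connectors than it has) can be checked on paper or with a few lines of code. This is a minimal sketch under stated assumptions: the node and expander names and the `check_cabling` function are hypothetical, and the default of 2 inputs per expander is the minimum mentioned in the text, not a value read from any hardware.

```python
from typing import Iterable, List, Set, Tuple


def check_cabling(
    links: Set[Tuple[str, str]],
    nodes: Iterable[str],
    expanders: Iterable[str],
    inputs_per_expander: int = 2,
) -> List[str]:
    """Check a planned SAS cabling layout.

    links is a set of (node, expander) pairs, one per cable from an HBA port
    to an expander input connector.
    """
    nodes = list(nodes)
    expanders = list(expanders)
    problems = []
    # Every node needs a path to every expander for redundancy.
    for node in nodes:
        for expander in expanders:
            if (node, expander) not in links:
                problems.append(f"{node} has no cable to {expander}")
    # An expander cannot accept more cables than it has input connectors.
    for expander in expanders:
        used = sum(1 for _, target in links if target == expander)
        if used > inputs_per_expander:
            problems.append(
                f"{expander} needs {used} inputs but has {inputs_per_expander}"
            )
    return problems


if __name__ == "__main__":
    two_node = {
        ("node1", "expA"), ("node1", "expB"),
        ("node2", "expA"), ("node2", "expB"),
    }
    print(check_cabling(two_node, ["node1", "node2"], ["expA", "expB"]))
```

With a third node, the same layout only passes if `inputs_per_expander` is raised to 3, which matches the remark about the 3rd connector.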

CONFIGURE JBOD IN DISK GENIUS SERIES

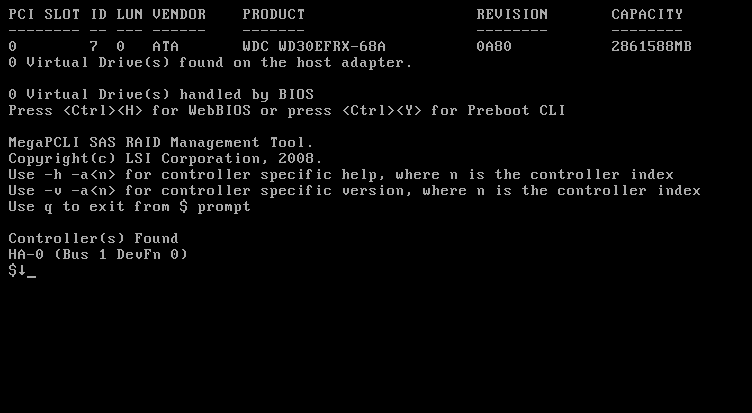

One of the most important features of Storage Spaces is that it can be used with Windows failover clustering. The solution architecture is very simple: SAS disks are required, along with a SAS JBOD with two SAS expanders and at least two connectors on each of the expanders (for a 2-node cluster). On the servers, conventional SAS HBAs are used as controllers. We used LSI (LSI 9207-8e), but you can use Adaptec 6H series and higher.

Some of this goes a bit on the sidelines, but much of the basic setup will be the same, except that the actual creation of the connections will be done from your XenServers, generally using XenCenter, so that this can be handled pool-wide. And, indeed, you'd want to use a different volume on the storage device than what you have currently allocated or, as Alan suggests, first move it elsewhere before reusing the same one on the MD unit. Also note that you may need to change the MTU to 9000 if you want to use jumbo frames, and that will have to match on your network switches as well. I generally also initialize the partition on the iSCSI device just to make sure it's all clean.
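For the jumbo frame note, two things are worth checking: that every device on the storage path uses the same MTU, and which ping payload size actually exercises that MTU. The sketch below is an illustration only. The device names and both function names are hypothetical, the MTU values are entered by hand, and the payload arithmetic assumes IPv4 with a 20-byte IP header (no options) and an 8-byte ICMP header.

```python
from typing import Dict

IPV4_HEADER_BYTES = 20   # IPv4 header without options
ICMP_HEADER_BYTES = 8    # ICMP echo header


def icmp_payload_for_mtu(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES


def mismatched_mtus(devices: Dict[str, int], expected: int = 9000) -> Dict[str, int]:
    """Return the devices whose configured MTU differs from the expected value."""
    return {name: mtu for name, mtu in devices.items() if mtu != expected}


if __name__ == "__main__":
    # Hand-entered example values, not read from real equipment.
    path = {"xenserver1-nic": 9000, "storage-switch": 1500, "md-iscsi-port": 9000}
    print("mismatches:", mismatched_mtus(path))
    print("ping payload for MTU 9000:", icmp_payload_for_mtu(9000))
```

A ping with that payload size and the don't-fragment flag set only succeeds if every hop on the path accepts the larger frames.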

CONFIGURE JBOD IN DISK GENIUS HOW TO

There are a few threads along the lines of how to set this all up, test, etc.

I have 3 Dell R530 servers directly connected to an MD3400 storage array. They are Pacemaker and Corosync cluster servers, but the cluster keeps failing, so I decided to convert everything to XenServer. Recently, I stopped one of the R530 servers and installed XenServer on it. (I'll do this one by one and eventually get all 3 running XenServer.)

While installing XenServer, it asked me whether I wanted to use the MD3400 storage. I didn't choose this option because the array is currently in use by the 2 other servers, and I was afraid to break or wipe out the data on it.

However, I keep wondering how I'm going to connect the MD3400 in the future, because eventually I would like all 3 servers to be in the pool and to create an HA environment sharing this MD3400 storage array. Is it possible to add this array later as shared storage, or was I supposed to configure it from the beginning? Sorry, I'm super new to the Xen environment. If I need to go back and reinstall XenServer I will, but I just wanted to make sure that I don't wipe out the data in the storage array.
